In having a talk reviewed recently, it was suggested I spend more time defining some of the subject I touched on. It occurred if I should go over (or at least introduce) these ideas during a talk for a SQL Saturday audience, some might find a post on the subject useful. Hence my recent post on key lookups.

Another such topic is table variables. I use table variables frequently at my current job, but they came up very infrequently when I worked at CSS in Microsoft. I remember the conversations about them being very simple at the time, as in, “you should just use temp tables instead.” But there is a lot of utility with table variables, and they could be a useful arrow in your quiver.

Statistics

Table variables are much maligned for one issue; they don’t have statistics.

In most ways, table variables function a lot like temp tables. You can define their columns and insert rows into them. Scoping is different. If you declare a table variable inside a procedure, its scope ends when you return from that procedure. If you create a temp table inside a procedure, it remains for the connection, so the procedure that called the procedure creating the temp table can see the temp table and its contents.

Both can have indexes created on them, but the table variable will have no statistics on its indexes. The result is that the SQL Server optimizer doesn’t know how many rows are in the table variable and will assume 1 row when trying to compare possible execution plans. This may lead to worse plan than you would have with a temp table containing the same data.

This is mitigated in a feature of SQL Server 2019’s Intelligent Query Processor, table variable deferred compilation. This provides cardinality estimates based on the actual number of rows in the table variable, and statements are not compiled until their first execution.

“This deferred compilation behavior is identical to the behavior of temporary tables. This change results in the use of actual cardinality instead of the original one-row guess.”

Microsoft

This feature does require you to be using compatability level 150, but this is a significant upgrade.

Alternatively, if you want to change the behavior around a table variable and aren’t using SQL Server 2019 (or have another compatability mode), you could use a query hint. I’ve used an INNER LOOP JOIN hint many times when using a table variable I expect to have a small number of rows as a filter to find specific rows in a permanent table. This gives me the join order and join type I expect to see, but I’m less shy about hints than many as I have commented previously.

Tempdb contention

There’s an ocean of posts about how to address tempdb contention, mostly focusing on creating enough data files in your tempdb or enabling trace flags that change some behaviors. The point I think that gets understated, is that creating fewer objects in tempdb would also address the issue.

One of the ways table variables function like temp tables, is that they store data in tempdb and can drive contention there as well. The cause of that contention is different, but creating table variables across many different sessions can still cause contention.

Table Valued Parameters

TVPs allow us to pass a table variable to a procedure as an input parameter. Within the procedure, they function as read-only table variables. If the read-only part is an issue, you can always define a separate table variable within the procedure, insert the data from the TVP, then manipulate it as you see fit.

The caveats here are the same; the TVP is stored in tempdb (and can cause contention there), you may have issues with cardinality due to the lack of statistics.

But the advantage is that we can make procedure calls that operate on sets of data rather than in a RBAR fashion. Imagine your application needs to insert 50 rows into a given table. You could call a procedure with parameters for each field you are inserting, and then do that 49 more times. If you do it serially, that will take some time, in part because of the round trips from your application to the SQL Server. If you do this in parallel, it will take longer than it should because of contention on the table. I haven’t blogged about the hot page issue, but that might make a good foundation topic.

Or you could make one call to a procedure with a TVP so it can insert the 50 rows without the extra contention or delay. Your call.

Memory Optimized Table Variables

This changes this by addressing one of the above concerns. Memory optimized table variables (let’s say motv for short), store data in memory and don’t contribute to an tempdb contention. The removal of tempdb contention can be a huge matter by itself, but storing data in memory means accessing a motv is very fast. Go here if you’d like to read the official documentation about memory optimized table variables.

A motv has to have at least one index, and you can choose a hashed or nonclustered index. There’s an entire page on the indexes for motv’s, and more details for both index types on this page on index architecture.

I tend to always use nonclustered indexes in large part for the simplicity. Optimal use of hash indexes requires creating the right number of buckets, depending on the number of rows expected to be inserted into the motv. Too many or too few buckets both present performance issues. It seems unlikely to configure this correctly and then not have the code change that later. It’s too micromanage-y to me, so I’d rather use nonclustered indexes here.

We do need to keep in mind that we are trading memory usage for some of these benefits, as Eric Darling points out amusingly and repeatedly here. This shouldn’t matter the majority of the time, but we should keep in mind that it isn’t free and ask questions like:

- Does that field really need to be nvarchar(max)?

- I expect this INSERT SELECT statement to insert 100 rows, but could it be substantially more?

- What if there are 100 simultaneous procedures running, each with an instance of this motv? Each with 100 rows?

- How much total memory could this nvarchar(200) require if we’re really busy?

I certainly would want to keep my columns limited, and you can make fields nullable.

Memory Optimized Table Valued Parameters

So, we can memory optimize our TVP and get the advantages of both. I use these frequently, and one of the big reasons (and this applies to TVPs in general) is concurrency.

So, let’s compare inserting 50 rows of data into a physical table using a normal procedure versus a procedure with a memory optimized table variable.

Singleton Inserts

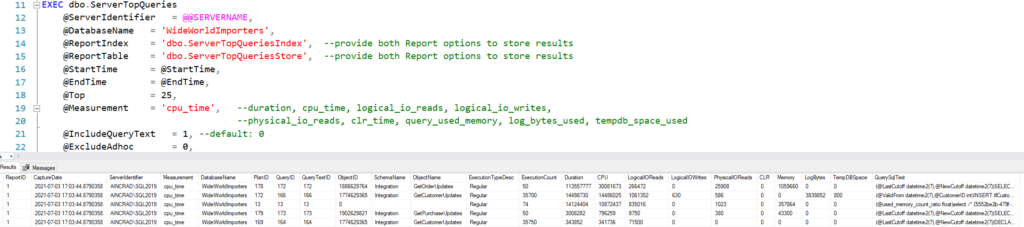

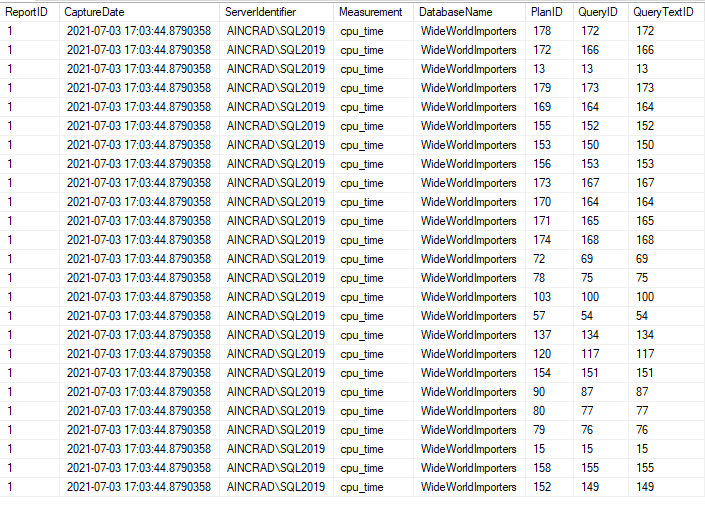

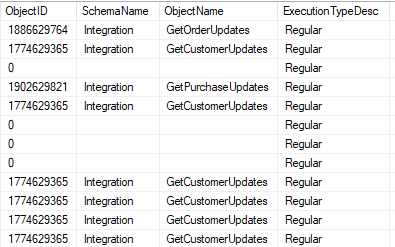

To test this, I used a tool provided in the World Wide Importers sample on github. Specifically, I was using the workload driver. This was intended to compare the performance of in-memory, permanent tables with on-disk tables, but the setup will work just fine for my purposes.

The VehicleLocation script enables In-Memory OLTP, creates a few tables, procedures to insert into those tables, and adds half a million rows to them. I’ll be using the OnDisk.VehicleLocations table and the OnDisk.InsertVehicleLocation to insert into it.

I wrote the following wrapper procedure to provide random inputs for that procedure:

USE WideWorldImporters

GO

CREATE OR ALTER PROCEDURE OnDisk.InsertVehicleLocationWrapper

AS

BEGIN

-- Inserting 1 record into VehicleLocation

DECLARE @RegistrationNumber nvarchar(20);

DECLARE @TrackedWhen datetime2(2);

DECLARE @Longitude decimal(18,4);

DECLARE @Latitude decimal(18,4);

SET NOCOUNT ON;

SET @RegistrationNumber = N'EA' + RIGHT(N'00' + CAST(CAST(RAND() AS INT) % 100 AS nvarchar(10)), 3) + N'-GL';

SET @TrackedWhen = SYSDATETIME();

SET @Longitude = RAND() * 100;

SET @Latitude = RAND() * 100;

EXEC OnDisk.InsertVehicleLocation @RegistrationNumber, @TrackedWhen, @Longitude, @Latitude;

END;

GO

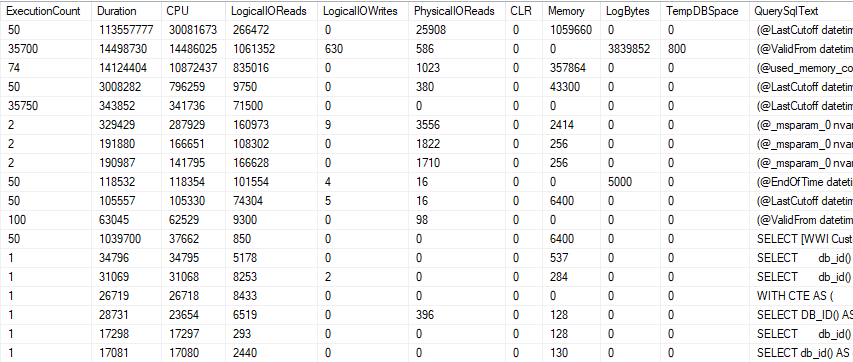

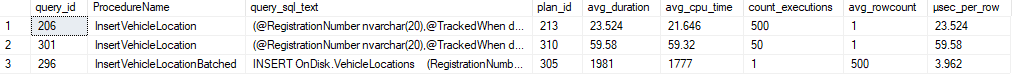

With this, I basically wrote a loop to call this to individually insert 500 rows. Query Store showed the procedure ran its one statement in 23 µs. I love measuring things in microseconds.

Parallel Inserts

Except that didn’t really test what I wanted. Running it in this fashion in SSMS, it would have run the procedure 500 times serially. I mentioned concurrency as an advantage for memory optimized TVPs, but to see that advantage we need to do singleton inserts into our table in parallel.

A little Powershell later, and I ran this again. I did only 50 inserts, but I did them using 50 parallel threads. Each thread will call the wrapper proc to generate random inserts, then call OnDisk.InsertVehicleLocation.

Since this table has clustered index based on an identity column, we will always be inserting into the last page on the table. But that will require an X lock to write the page, which means our parallel calls will be blocking each other. And the more there are, the worst the waiting will get. This is the hot page issue in a nutshell.

On average each execution took 59 µs. Nearly three times as long. Likely, the first thread completed just as fast as previous, but each subsequent thread has to get in line to insert into the OnDisk.VehicleLocation table. And the average duration increases steadily throughout.

Inserting with a Memory Optimized TVP

Here’s the script I wrote to test using a memory optimized TVP to insert 500 rows.

USE WideWorldImporters

GO

IF NOT EXISTS(

SELECT 1

FROM sys.types st

WHERE

st.name = 'VehicleLocationTVP'

)

BEGIN

CREATE TYPE OnDisk.VehicleLocationTVP

AS TABLE(

RegistrationNumber nvarchar(20),

TrackedWhen datetime2(2),

Longitude decimal(18,4),

Latitude decimal(18,4)

INDEX IX_OrderQtyTVP NONCLUSTERED (RegistrationNumber)

)WITH(MEMORY_OPTIMIZED = ON);

END;

GO

CREATE OR ALTER PROCEDURE OnDisk.InsertVehicleLocationBatched

@VehicleLocationTVP OnDisk.VehicleLocationTVP READONLY

AS

BEGIN

SET NOCOUNT ON;

SET XACT_ABORT ON;

INSERT OnDisk.VehicleLocations

(RegistrationNumber, TrackedWhen, Longitude, Latitude)

SELECT

tvp.RegistrationNumber,

tvp.TrackedWhen,

tvp.Longitude,

tvp.Latitude

FROM @VehicleLocationTVP tvp;

RETURN @@RowCount;

END;

GO

-- So let's insert 500 rows into this on-disk table using a TVP

DECLARE

@Target INT = 500,

@VehicleLocationTVP OnDisk.VehicleLocationTVP;

DECLARE @Counter int = 0;

SET NOCOUNT ON;

WHILE @Counter < @Target

BEGIN

-- Generating one random row at a time

INSERT @VehicleLocationTVP

(RegistrationNumber, TrackedWhen, Longitude, Latitude)

SELECT

N'EA' + RIGHT(N'00' + CAST(@Counter % 100 AS nvarchar(10)), 3) + N'-GL',

SYSDATETIME(),

RAND() * 100,

RAND() * 100;

SET @Counter += 1;

END;

EXEC OnDisk.InsertVehicleLocationBatched @VehicleLocationTVP;

GO

This creates the type for our memory optimized table variable and defines the procedure. The last batch generates 500 rows of random data, inserting them into the same type, and calls the new proc with the TVP as input. Executing this once to insert 500 rows took 1981 µs, which is just under 4 µs per row.

Wrapping up

This might have been my wordiest blog, but I hope you learned something from it. I’ve rewritten a number of procedures in recent years to operate on batches, and the results have largely been great.

Again, I will frequently use a join or index hint when joining the TVP to a base table, and that’s a minor change that can prevent an expensive mistake from the lack of statistics.

If you have any topics related to performance in SQL Server you would like to hear more about, please feel free to @ me and make a suggestion.

Please follow me on twitter (@sqljared) or contact me if you have questions.