In my most recent blog post, I looked at the Query Variation report, which compares the recent performance of queries versus their historical performance to either highlight improvements or regressions in performance. The Waits Variation component does the same, but comparing the recent waits for a query to its historical waits.

One thing to keep in mind, is that if a given query is changed in any way (to change the filter, return additional columns, or include a hint), the changed query will have a different query_id in Query Store. In which case, both the Waits Variation and Query Variation procedures will not compare the historical performance of the old query to the recent performance of the new one.

That being said, let’s look at the dbo.WaitsVariation procedure’s options.

Waits Variation Options

- @ServerIdentifier: Defaults to the current instance. Set this to gather data from another instance of SQL Server.

- @DatabaseName: Defaults to the current database. I’m querying data from WideWorldImporters for my examples.

- @ReportIndex: Default NULL. When used, stores information about the parameters used for the report.

- @ReportTable: Default NULL. Allows you to store the report data in a table like dbo.WaitsVariationStore, created by the installer.

- @WaitType: Which wait category are we concerned with? Total by default. [Total, CPU, Lock, Latch, BufferLatch, BufferIO, WorkerThread, NetworkIO, Parallelism…] There are 36 options here, so check the header of dbo.WaitsVariation for the full list.

- @Metric: Do we want to compare the total wait time for the query, or the average? Avg by default. [Avg, Total]

- @VariationType: Are we looking for queries that have improved [I] or regressed [R]. ‘R’ by default.

- @ResultsRowCount: Number of rows to include in the report. Only the largest regressions or improvements are included. Default is 25, and if this value is NULL or negative, the procedure uses the default.

- @RecentStartTime and @RecentEndTime: Defines what the ‘recent’ period is, which will be compared against the historical. The defaults are 1 hour ago for @RecentStartTime, and now for @RecentEndTime.

- @HistoryStartTime and @HistoryEndTime: Defines what the ‘history’ period is, which will be compared against the recent. The defaults are 30 days ago for @HistoryStartTime, and 1 hour ago for @HistoryEndTime.

- @IncludeQueryText: Includes the text for any identified queries in the output. Default is 0.

- @ExcludeAdhoc: Ignores ad hoc queries, anything that isn’t part of a procedure or other defined object. Default is 0.

- @ExcludeInternal: Excludes internal queries\operations run by SQL Server itself. Default is 0.

- @VerboseMode: Default 0. Provides the queries being used in the messages tab.

- @TestMode: Default 0. When enabled, does everything except actually run the generated queries to create the report.

- OUTPUT @ReportID: When you @ReportIndex and @ReportTable, a saved report is generated. You can then use the @ReportID to see that report separately.

So, these parameters are very similar to dbo.QueryVariation. You’ll likely want to alter your time parameters, and you could generate multiple reports to check different wait types. Or you could do a regular report for total waits, and review to see which type of wait had the biggest change.

Best Total Wait Improvement

Given that the default is to look for regressions instead of improvements, if I wanted to compare the last two day’s waits to the previous month, I could use the following:

DECLARE @RecentStartTime DATETIME2 = DATEADD(HOUR, -48, GETUTCDATE());

DECLARE @RecentEndTime DATETIME2 = GETUTCDATE();

DECLARE @HistoryStartTime DATETIME2 = DATEADD(DAY, -30, @RecentEndTime);

DECLARE @HistoryEndTime DATETIME2 = @RecentStartTime;

EXECUTE [dbo].[WaitsVariation]

@DatabaseName = 'WideWorldImporters',

@WaitType = 'Total',

@Metric = 'Avg',

@VariationType = 'I',

@ExcludeAdhoc = 1,

@HistoryStartTime = @HistoryStartTime,

@HistoryEndTime = @HistoryEndTime,

@RecentStartTime = @RecentStartTime,

@RecentEndTime = @RecentEndTime;

Choosing ‘Total’ as your @Metric may work for some cases, but would tend to give results with large variation. If a query executed more times in one period than the other, I would expect that period to have more waits.

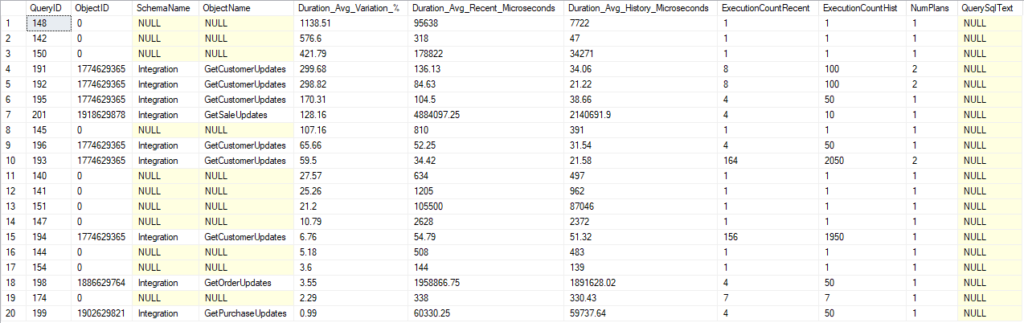

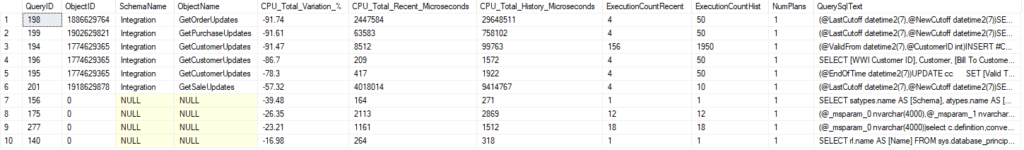

I ran a workload to generate some activity, and only one query in it regressed, so I’m returning improvements so there’s a little more to look at.

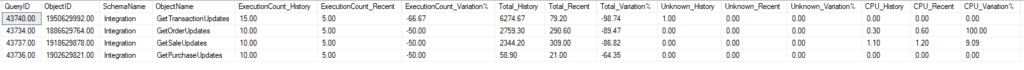

So, the four procs listed had some rather dramatic improvements. We can see the amount of the total wait reduction, and columns for unknown and CPU waits. This is only a portion of the incredibly wide result set. There are history, recent, and variation % columns for each wait type.

We ran this script looking for ‘Total’ as our @WaitType, which means we are sorting and returning the top rows with the most improvement in that area. But it did record the improvement for all wait types. It only takes a little scrolling to find our causes:

So, the first procedure had relatively large parallelism waits in its history, compared with none in the recent period. I would argue the variation % should be 100% not 0%, but the logic here may be special to prevent us from diving by 0.

All four procedures had NetworkIO waits. It’s likely the tool I was using to run these queries in a loop was not consuming the results fast enough, causing delays within SQL Server. These queries were taking so long that I used a smaller date range on my more recent calls. Fewer records, less time for the app to consume them, and smaller NetworkIO waits.

One thing I have noticed is that if you use @IncludeQueryText, the text returned is compressed and unreadable. Unless you create a saved report by using the @ReportIndex and @ReportTable options, which leads us to the second procedure.

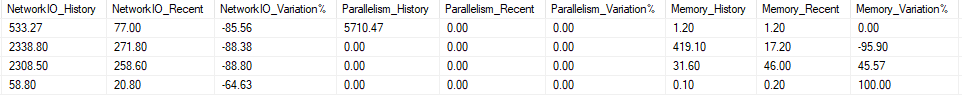

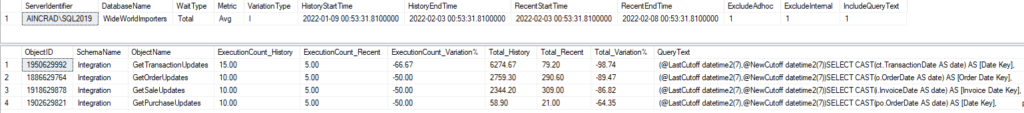

Waits Variation from Store

If I save the results, there are records in the tables indicated, I can run dbo.WaitsVariationFromStore to read out the header of the report and its details in two result sets.

The result set is much more limited, but focuses on the metric we chose. We can see the number of executions, the related object, the change in our totals waits, and the decompressed text for the query itself.

There are only three parameters for this proc, but here’s an example:

USE QDSToolBox

GO

EXECUTE dbo.WaitsVariationFromStore

@ReportID = 1,

@VerboseMode = 0,

@TestMode = 0;

Nearly done

There are two more components to the QDS Dashboard I want to detail, but I may be spreading them out between some other topics I want to blog on.

Time to go update slides for a user group meeting later this week.

If you have any topics related to performance in SQL Server you would like to hear more about, please feel free to make a suggestion. You can follow me on twitter (@sqljared) and contact me if you have questions.